For years, AI hallucinations were brushed off as harmless quirks—funny mistakes, wrong answers, or awkward fabrications that users learned to double-check. In 2026, that attitude has collapsed. AI hallucination lawsuits are no longer hypothetical. They are real, active, and growing, as businesses and individuals face tangible harm caused by confidently wrong AI outputs.

What changed is not that AI started hallucinating; it always did. What changed is where AI is being used. Legal advice, financial decisions, medical summaries, hiring, journalism, and customer support now rely on AI outputs at scale. When hallucinations move from chat experiments into decisions with real-world consequences, courts inevitably follow.

Why AI Hallucination Lawsuits Are Emerging Now

The rise of AI hallucination lawsuits is driven by deployment, not innovation. AI systems are now embedded in workflows where errors cause measurable damage.

Key triggers include:

• AI-generated legal citations that don’t exist

• Financial summaries with fabricated data

• Medical or compliance advice that is factually wrong

• Automated reports used without human verification

When decisions are made on hallucinated information, accountability becomes unavoidable.

What Counts as an AI Hallucination in Legal Terms

In courtrooms, hallucinations aren’t judged as “bugs”—they’re judged as misinformation.

Legally relevant hallucinations include:

• Fabricated sources presented as real

• Incorrect facts delivered with high confidence

• Non-existent laws, cases, or policies

• False claims framed as verified data

The issue isn’t that AI makes mistakes. It’s that it presents them as truth.

Who Is Being Sued in These Cases

One of the most complex aspects of AI hallucination lawsuits is assigning responsibility. Lawsuits don’t target “the AI.” They target people and organizations.

Common defendants include:

• Companies deploying AI tools

• SaaS platforms selling AI-powered features

• Firms relying on AI-generated outputs without review

• Professionals who passed off AI output as their own work

Courts are asking a simple question: Who had the duty to verify?

Why Disclaimers Are No Longer Enough

For years, AI providers relied on disclaimers like “for informational purposes only.” That shield is weakening fast.

Why disclaimers fail in practice:

• Users reasonably trust professional tools

• Outputs are framed as authoritative

• Disclaimers are buried in fine print

• Businesses market AI as reliable

In AI hallucination lawsuits, courts are examining how the tool was sold, not just what the disclaimer said.

How Misinformation Turns Into Legal Damage

Hallucinations become lawsuits when they cause harm.

Common damage pathways:

• Financial losses from wrong decisions

• Reputational harm from false reports

• Regulatory violations based on bad data

• Legal penalties triggered by incorrect filings

Once harm is provable, the lack of any intent to mislead offers little protection. In negligence claims, what matters is the impact and whether reasonable care was taken.

Why Courts Are Taking These Cases Seriously

Judges are not debating whether AI is impressive. They’re assessing duty of care.

Courts focus on:

• Reasonable expectations of accuracy

• Human oversight requirements

• Industry standards

• Foreseeability of errors

The message is clear: using AI does not remove responsibility—it redistributes it.

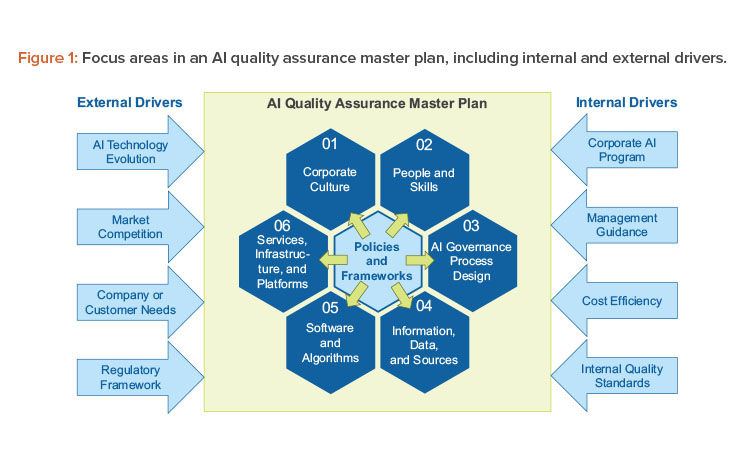

How Companies Are Quietly Changing AI Use

In response to AI hallucination lawsuits, many companies are changing policies—quietly.

New internal rules include:

• Mandatory human review of AI outputs

• Restricted use in legal and financial contexts

• Logging and audit trails for AI decisions

• Clear labeling of AI-generated content
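To make these rules concrete, here is a minimal sketch of how a team might combine three of them: mandatory human review, an audit trail, and labeling. It assumes a hypothetical `release_output` gate that every AI output must pass through. The label text, the model name, and the in-memory list standing in for an audit store are all illustrative placeholders, not a standard or any vendor's API.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical label; real wording would come from company policy.
AI_LABEL = "[AI-generated, human-reviewed]"


@dataclass
class AuditRecord:
    timestamp: str
    model: str
    prompt_sha256: str   # hashes let auditors match records without storing raw text
    output_sha256: str
    reviewer: Optional[str]
    approved: bool


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def release_output(prompt: str, output: str, model: str,
                   reviewer: Optional[str], approved: bool,
                   audit_log: List[str]) -> Optional[str]:
    """Log every attempt; release labeled output only if a named reviewer approved it."""
    record = AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model=model,
        prompt_sha256=_sha256(prompt),
        output_sha256=_sha256(output),
        reviewer=reviewer,
        # An approval without a named reviewer does not count as review.
        approved=approved and reviewer is not None,
    )
    audit_log.append(json.dumps(asdict(record)))  # stand-in for an append-only store
    if not record.approved:
        return None  # held back: nothing unreviewed reaches the customer
    return f"{AI_LABEL} {output}"
```

The design choice worth noting is that the log entry is written before the release decision, so rejected and unreviewed outputs leave a trail too. That trail is the evidence of due diligence these policies aim to produce.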

Risk departments are now as involved in AI rollouts as innovation teams.

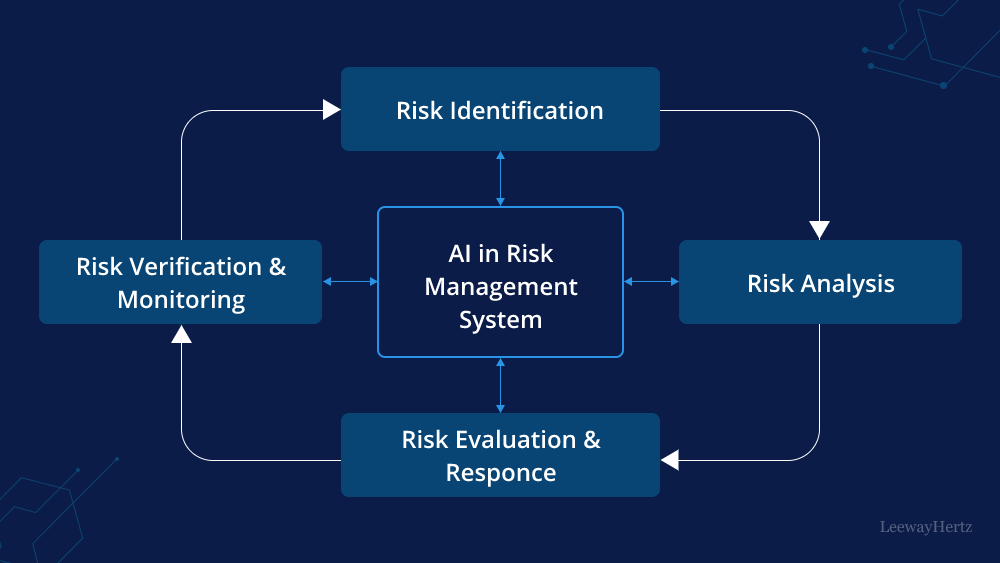

Why “Human in the Loop” Is Becoming Mandatory

The phrase “human in the loop” has shifted from best practice to legal necessity.

Why it matters:

• Humans are expected to catch obvious errors

• Oversight proves due diligence

• Review reduces liability exposure

• Courts favor layered decision-making

In AI hallucination lawsuits, the absence of human review is often the weakest point in a defense.

What This Means for AI Product Builders

For AI builders, the era of “ship first, fix later” is ending.

Builders must now consider:

• Accuracy claims in marketing

• Clear limits of use

• Safety rails for high-risk outputs

• Customer education on verification
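As one illustration of a safety rail for high-risk outputs, the sketch below targets the fabricated-citation problem described earlier. It holds back any draft that cites a case name missing from a verified index. Everything here is an assumption for illustration: the regex only recognizes simple "Party v. Party" names (real legal citations are far more varied), and the `verified` collection stands in for whatever authoritative database a product would actually check against.

```python
import re
from typing import Iterable, List

# Hypothetical, deliberately simple pattern for "Party v. Party" case names.
CASE_NAME = re.compile(r"\b[A-Z][a-z]+ v\. [A-Z][a-z]+\b")


def unverified_citations(text: str, verified: Iterable[str]) -> List[str]:
    """Return case names cited in `text` that are absent from `verified`,
    in order of first appearance and without duplicates."""
    known = set(verified)
    missing: List[str] = []
    for name in CASE_NAME.findall(text):
        if name not in known and name not in missing:
            missing.append(name)
    return missing


def safe_to_release(text: str, verified: Iterable[str]) -> bool:
    """True only if every detected citation is in the verified index;
    otherwise the draft should go to a human reviewer."""
    return not unverified_citations(text, verified)
```

A check like this cannot prove a draft is correct. It only catches one class of confident fabrication before it ships, which is why it complements human review rather than replacing it.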

Ignoring these invites legal scrutiny—not growth.

Conclusion

AI hallucination lawsuits mark a turning point. AI errors are no longer amusing mistakes—they’re legal liabilities. As AI moves deeper into real-world decision-making, courts are demanding the same standards of care expected of humans and institutions.

The future of AI will be decided not by smarter models alone but by how responsibly they are deployed. The joke phase is over. Accountability has arrived.

FAQs

What are AI hallucination lawsuits?

Legal cases where harm was caused by false or fabricated AI-generated information.

Who is liable for AI hallucinations?

Usually the company or professional who deployed or relied on the AI output without proper verification.

Do disclaimers protect AI companies?

Increasingly, no—especially if the tool is marketed as reliable or professional-grade.

Are AI hallucinations considered negligence?

They can be, if reasonable safeguards and human oversight were missing.

Will these lawsuits increase in the future?

Yes. As AI adoption grows, legal scrutiny will grow alongside it.
